Introduction

When I search for something on the internet, I often find that English content is much more comprehensive than French content.

Although it might seem obvious given the number of English speakers in the world compared to French speakers (about 4 to 5 times more), I wanted to test this hypothesis and quantify it.

TL;DR: On average, an English article on Wikipedia contains 19% more information than its French counterpart.

The source code for this analysis is available here: https://github.com/jverneaut/wikipedia-analysis/

Protocol

Wikipedia is one of the largest sources of quality content on the web worldwide.

At the time of writing this article, the English version has over 6,700,000 unique articles compared to only 2,500,000 for the French version. We will use this corpus as the basis for our study.

Using the Monte Carlo method, we will sample random articles from Wikipedia for each language and calculate the average character length of this corpus. With a sufficiently large number of samples, we should obtain results close to reality.
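To illustrate the idea, here is a minimal sketch on synthetic data. The population of article lengths is simulated (a log-normal distribution picked arbitrarily, not fitted to Wikipedia), and the function names are hypothetical. It only shows that the mean of a random sample lands close to the mean of the whole population.

```python
import random
import statistics

def make_population(size, seed=0):
    """Simulate article lengths; the log-normal shape is an assumption."""
    rng = random.Random(seed)
    return [rng.lognormvariate(8.0, 1.0) for _ in range(size)]

def monte_carlo_mean(population, sample_size, seed=1):
    """Estimate the population mean from a uniform random sample."""
    rng = random.Random(seed)
    sample = rng.sample(population, sample_size)
    return statistics.fmean(sample)

population = make_population(100_000)
true_mean = statistics.fmean(population)
estimate = monte_carlo_mean(population, 10_000)
relative_error = abs(estimate - true_mean) / true_mean
print(f"true mean: {true_mean:.1f}, estimate: {estimate:.1f}, "
      f"relative error: {relative_error:.2%}")
```

Increasing the sample size should shrink the relative error, which is why the sample sizes chosen later matter.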

Since the Wikimedia API does not provide a method to get the character length of an article, we will obtain this information as follows:

- Retrieve the byte size of a large sample of articles via the Wikimedia API.

- Estimate the number of bytes per character from a small sample of articles using the Monte Carlo method.

- Estimate the character count of the articles in the large sample by dividing their byte size by the bytes-per-character estimate obtained in step 2.

As we are using the Monte Carlo method to estimate bytes per character, we need the largest possible number of articles to minimize deviation from the actual number.

The Wikimedia API documentation specifies these limitations:

- No more than 500 random articles per request.

- No more than 50 article contents per request.

Taking these limitations into account and as a compromise between precision and query execution time, I chose to sample 100,000 articles per language as a reference for article byte length and 500 articles to estimate the bytes per character for each language.
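Reaching such a sample size means calling the API repeatedly and accumulating the batches. The loop below is one possible sketch: `collect_articles`, `fetch_batch`, and `max_requests` are hypothetical names, and removing duplicates by page ID is an assumption rather than something the analysis is known to do.

```python
import itertools

def collect_articles(fetch_batch, target, max_requests=1000):
    """Call fetch_batch() until `target` distinct articles are collected.

    fetch_batch is any function returning a dict of page ID -> page data,
    for example a wrapper around a random-article API call.
    """
    articles = {}
    for _ in range(max_requests):
        if len(articles) >= target:
            break
        # Random draws can repeat; keying by page ID removes duplicates.
        articles.update(fetch_batch())
    return dict(list(articles.items())[:target])

# Stand-in for the real API so the sketch runs offline.
_ids = itertools.count()

def fake_fetch_batch(batch_size=500):
    return {str(next(_ids)): {"length": 1000} for _ in range(batch_size)}

sample = collect_articles(fake_fetch_batch, 1200)
print(len(sample))
```

Passing the fetching function as an argument keeps the loop independent from the network, which makes it easy to try out with fake data first.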

Limitations

Currently, the Wikimedia API returns its own wikitext format when asked to provide the content of an article. This format is not plain text and is closer to HTML. Since all languages on Wikimedia use this same format, I judged that we could rely on it without biasing the direction of our final results.

However, some languages are more verbose than others. For example, in French, we say "Comment ça va?" (14 characters) compared to "How are you?" (12 characters) in English. This study does not account for this phenomenon. If we wanted to address it, we could compare different translations of the same book corpus to establish a "density" correction variable for languages. In my research, I did not find any data that provides a ratio to apply to each language.

However, I did find a very interesting paper that compares the information density of 17 different languages and the speed at which they are spoken. Its conclusion is that the most "efficient" languages are spoken more slowly than the least efficient ones, resulting in a verbal information transmission rate that stays around 39 bits per second.

Interesting.

Get the average byte length of articles in each language

As stated in the protocol, we will use a Wikipedia API to retrieve 500 random articles in a given language.

    import json
    import requests

    def getRandomArticlesUrl(locale):
        # prop=info returns metadata only, including each page's length in bytes
        return "https://" + locale + ".wikipedia.org/w/api.php?action=query&generator=random&grnlimit=500&grnnamespace=0&prop=info&format=json"

    def getRandomArticles(locale):
        url = getRandomArticlesUrl(locale)
        response = requests.get(url)
        # The pages are returned as a dict keyed by page ID
        return json.loads(response.content)["query"]["pages"]

This gives us a response like { "id1": { "title": "...", "length": 1234 }, "id2": { "title": "...", "length": 5678 }, ... }, which we can use to retrieve the size in bytes of a large number of articles.
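As a rough sketch, averaging the byte lengths from a response of that shape could look like this. The `average_length` helper and the two-entry example dict are illustrative assumptions, not code from the repository.

```python
def average_length(pages):
    """Mean of the "length" field (in bytes) over a dict keyed by page ID."""
    lengths = [page["length"] for page in pages.values()]
    return sum(lengths) / len(lengths)

example_pages = {
    "id1": {"title": "...", "length": 1234},
    "id2": {"title": "...", "length": 5678},
}
print(average_length(example_pages))  # 3456.0
```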

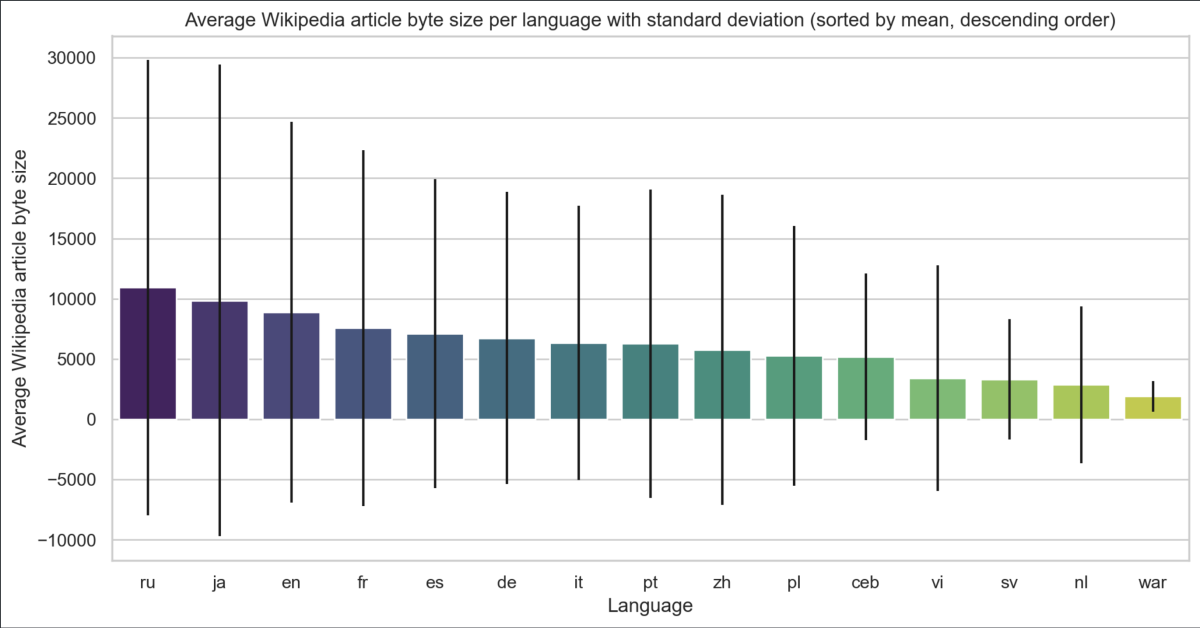

This data is then reworked to obtain the following table:

| Language | Average length (bytes) | ... |
|---|---|---|
| EN | 8865.33259 | |
| FR | 7566.10867 | |
| RU | 10923.87673 | |
| JA | 9865.59485 | |
| ... | | |

At first glance, it would seem that articles in English have a larger byte length than those in French. Similarly, those in Russian have a larger byte length than those in any other language.

Should we stop at this conclusion? Not quite. Since the length reported by Wikipedia is a length in bytes, we need to delve a little deeper into how characters are encoded to understand these initial results.

How Letters Are Encoded: An Introduction to UTF-8

What is a byte?

Unlike you and me, a computer has no concept of letters, let alone an alphabet. For it, everything is represented as a sequence of 0s and 1s.

In our decimal system, we count from 0 to 1, then from 1 to 2, and so on up to 9, after which we need a second digit to write 10.

For the computer, which uses a binary system, we go from 0 to 1, then from 1 to 10, then from 10 to 11, 100, and so forth.

Here is a comparison table to make it clearer:

| Decimal | Binary |
|---|---|
| 0 | 0 |
| 1 | 1 |
| 2 | 10 |
| 3 | 11 |
| 4 | 100 |
| 5 | 101 |
| 6 | 110 |
| 7 | 111 |
| 8 | 1000 |
| 9 | 1001 |
| 10 | 1010 |
| ... | ... |

Learning binary goes well beyond the scope of this article, but you can see that as the number becomes larger, its binary representation is "wider" compared to its decimal representation.

Since a computer needs to distinguish between numbers, it stores them in small packets of 8 units called bytes. A byte is composed of 8 bits, for example, 01001011.
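For readers who want to try this out, Python's built-in `format` can print a number as an 8-bit byte. The `to_byte_string` wrapper is a hypothetical helper written for this illustration.

```python
def to_byte_string(number):
    """Format an integer from 0 to 255 as an 8-bit binary string."""
    if not 0 <= number <= 255:
        raise ValueError("a single byte holds values from 0 to 255")
    return format(number, "08b")

for n in (0, 1, 2, 10, 75, 255):
    print(n, to_byte_string(n))
```

Here 75 comes out as 01001011, the example byte given above, and 255 (eight 1s) is the largest value a single byte can hold.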

How UTF-8 stores characters

We have seen how to store numbers. Storing letters is a little more complicated.

The Latin alphabet, used in many Western countries, has 26 letters. Couldn't we just use a reference table where each number from 0 to 25 corresponds to a letter?

| Letter | Index | Binary index |
|---|---|---|
| a | 0 | 00000000 |
| b | 1 | 00000001 |
| c | 2 | 00000010 |
| ... | | |
| z | 25 | 00011001 |

But we have more characters than just lowercase letters. In this simple sentence, we also have uppercase letters, commas, periods, etc. A standardized list was created to include all these characters within a single byte, known as the ASCII standard.

At the dawn of computing, ASCII was sufficient for basic uses. But what if we want to use other characters? How do we write with the Cyrillic alphabet (33 letters)? This is why the UTF-8 standard was created.

UTF-8 stands for Unicode (Universal Coded Character Set) Transformation Format - 8 bits. It is an encoding system that allows a computer to store characters using one or more bytes.

To indicate how many bytes are used for the data, the first bits of this encoding are used to signal this information.

| First UTF-8 bits | Number of bytes used |
|---|---|
| 0xxxxxxx | 1 |
| 110xxxxx ... | 2 |
| 1110xxxx ... ... | 3 |
| 11110xxx ... ... ... | 4 |

The following bits also have their purpose, but once again, this goes beyond the scope of this article. Just note that, at a minimum, a single bit is used as a signature when our character fits within the 7 remaining bits, which cover the 128 values from 00000000 = 0 to 01111111 = 127.
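The prefixes in the table can be observed directly with Python's `str.encode`. This is only an illustrative sketch: the sample characters and the `utf8_bytes_as_bits` helper are my own choices.

```python
def utf8_bytes_as_bits(char):
    """Return the UTF-8 encoding of one character as a list of 8-bit strings."""
    return [format(byte, "08b") for byte in char.encode("utf-8")]

# Characters are written as escapes so the code point is visible.
samples = [
    ("a", "Latin letter a"),
    ("\u00e9", "e with acute accent"),
    ("\u044f", "Cyrillic letter ya"),
    ("\u3042", "Hiragana letter a"),
    ("\U0001F600", "emoji"),
]
for char, label in samples:
    bits = utf8_bytes_as_bits(char)
    print(f"{label}: {len(bits)} byte(s) -> {' '.join(bits)}")
```

The first byte of each result starts with 0, 110, 1110, or 11110, matching the number of bytes used.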

For English, which does not use accents, we can assume that most characters in an article will be encoded this way, and therefore the average number of bytes per character should be close to 1.

For French, which uses accents, cedillas, etc., we assume that this number will be higher.

Finally, for languages written in scripts that fall outside ASCII entirely, such as Russian (Cyrillic) and Japanese (kana and kanji), we can expect a higher number of bytes per character, which provides a starting point for explaining the results obtained earlier.

Get the average character length in bytes of articles for each language

Now that we understand what the value returned earlier by the Wikipedia API means, we want to calculate the number of bytes per character for each language in order to adjust these results.

To do this, we use a different way of accessing the Wikipedia API that allows us to obtain both the content of the articles and their byte length.

Why not use this API directly for everything? It only returns 50 results per request, whereas the previous one returns 500. In the same amount of time, the first method therefore retrieves 10 times more results.

More concretely, if the API calls took 20 minutes with the first method, they would take 3 hours and 20 minutes with this approach.
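The arithmetic behind that estimate can be checked quickly. The sketch assumes that total time is proportional to the number of requests, and `requests_needed` is a hypothetical helper.

```python
import math

def requests_needed(article_count, per_request):
    """Number of API calls required to fetch article_count articles."""
    return math.ceil(article_count / per_request)

articles = 100_000
fast = requests_needed(articles, 500)  # 500 articles per call
slow = requests_needed(articles, 50)   # 50 articles per call
slowdown = slow / fast

# Scale the 20-minute figure by the slowdown factor.
hours, minutes = divmod(int(20 * slowdown), 60)
print(fast, slow, f"{hours} h {minutes} min")
```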

    import json
    import requests

    def getRandomArticlesUrl(locale):
        # rvprop=content|size returns each article's wikitext and its size in bytes
        return "https://" + locale + ".wikipedia.org/w/api.php?action=query&generator=random&grnlimit=50&grnnamespace=0&prop=revisions&rvprop=content|size&format=json"

    def getRandomArticles(locale):
        url = getRandomArticlesUrl(locale)
        response = requests.get(url)
        # The pages are returned as a dict keyed by page ID
        return json.loads(response.content)["query"]["pages"]

Once this data is synthesized, here is an excerpt of what we get:

| Language | Bytes per character | ... |
|---|---|---|
| EN | 1.006978892420735 | |
| FR | 1.0243214042939228 | |
| RU | 1.5362439940531318 | |
| JA | 1.843857157700553 | |
| ... | | |
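A ratio like the ones in this table can be computed from article text along the following lines. This is a sketch under assumptions: it divides total bytes by total characters over the sample (rather than averaging per-article ratios), it counts characters as Unicode code points, and `bytes_per_character` is a hypothetical name.

```python
def bytes_per_character(texts):
    """Total UTF-8 bytes divided by total characters over a list of strings."""
    total_bytes = sum(len(text.encode("utf-8")) for text in texts)
    total_chars = sum(len(text) for text in texts)
    return total_bytes / total_chars

print(bytes_per_character(["Wikipedia"]))   # ASCII only: 1 byte per character
print(bytes_per_character(["Wikipédia"]))   # one accented letter out of nine
print(bytes_per_character(["Википедия"]))   # Cyrillic: 2 bytes per character
```

Real articles mix ASCII markup, digits, and punctuation with the language's own script, which may explain why the measured Russian figure sits below 2.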

So our intuition was correct: languages with a larger character set distort the data because of the way their content is stored.

We also see that French uses more bytes on average to store its characters than English, as we previously assumed.

Results

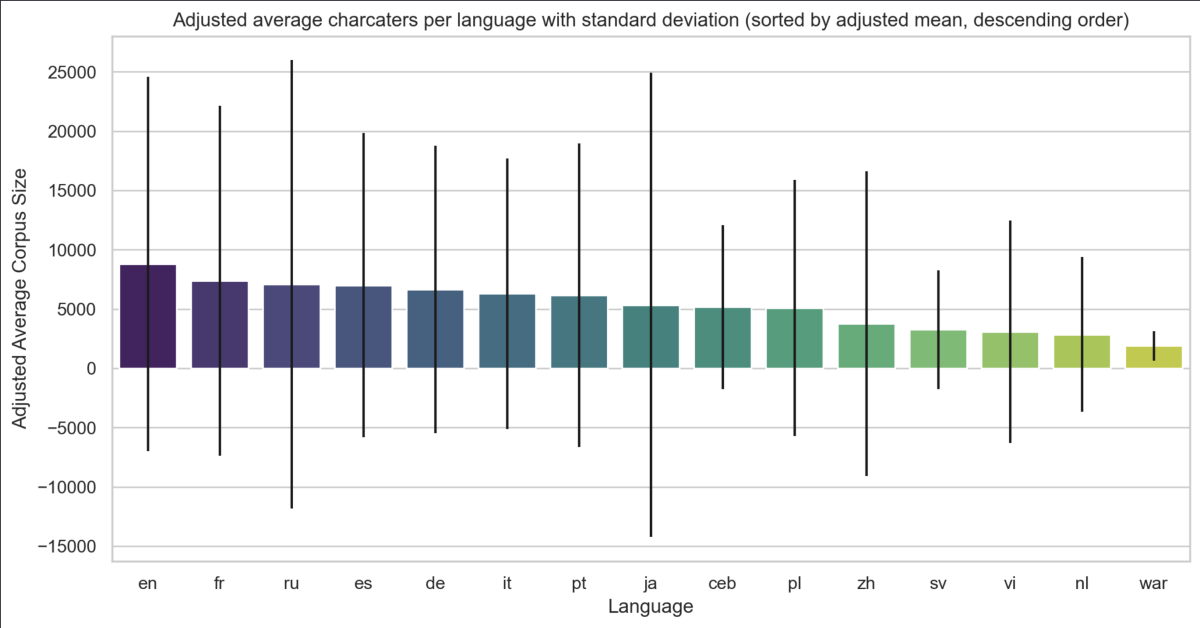

We can now correct the data by changing from a size in bytes to a size in characters which gives us the following graph:

Our hypothesis is therefore confirmed.

On average, English is the language with the most content per page on Wikipedia. It is followed by French, then Russian, Spanish, and German.

The standard deviation (shown with the black bars) is large for this dataset, which means that the content size varies greatly from the shortest to the longest article. Therefore, it is difficult to establish a general truth for all articles, but this trend still seems consistent with my personal experience of Wikipedia.

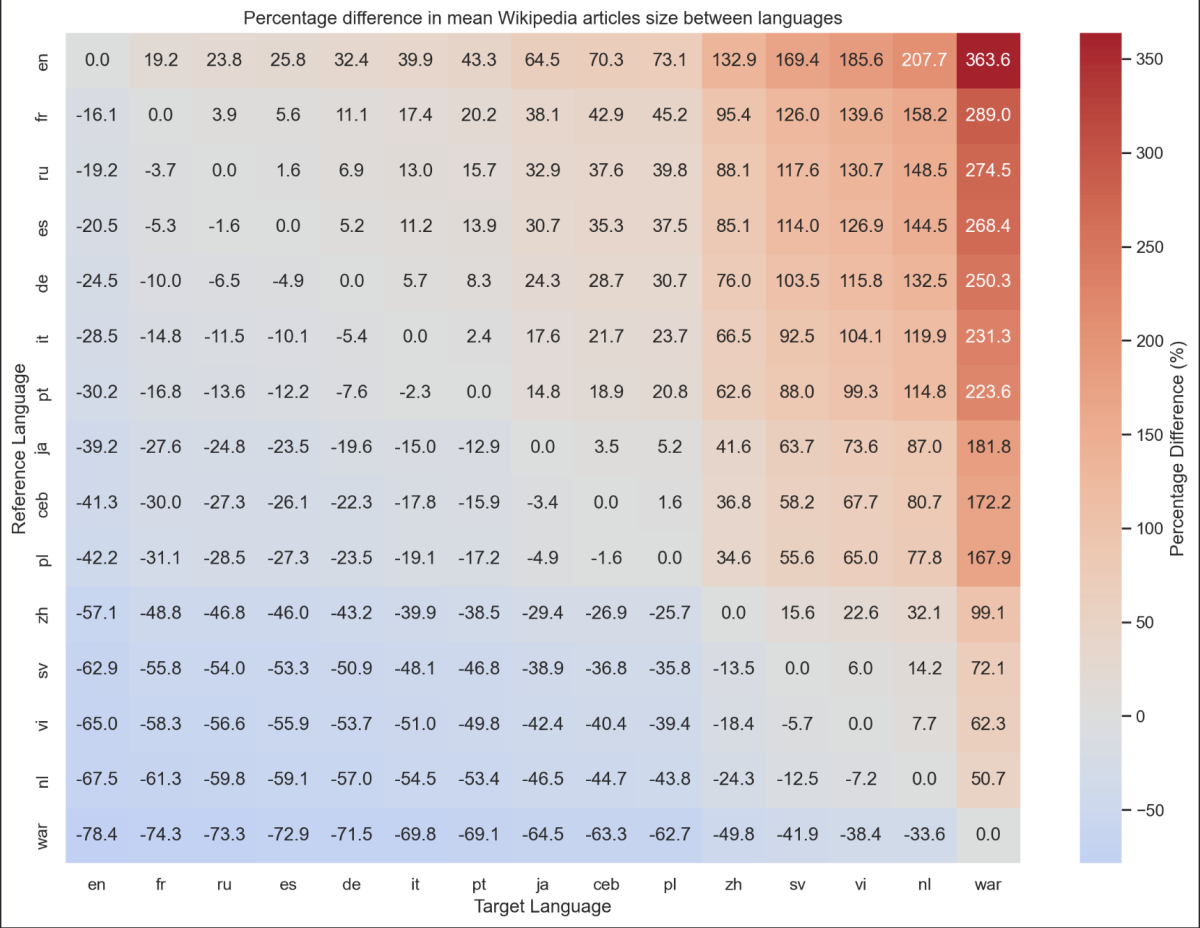

If you want all the results from this experiment, I have also created this representation, which compares each language with its percentage of additional/less content relative to the others.

This brings us back to our conclusion: on average, an English article on Wikipedia contains 19% more information than its equivalent in French.
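As a sanity check, the correction can be reproduced from the two excerpts shown earlier. The sketch below only uses the four languages listed in those tables, so it is not the full dataset, and the variable names are my own.

```python
# Average article length in bytes (from the first table).
average_bytes = {
    "EN": 8865.33259,
    "FR": 7566.10867,
    "RU": 10923.87673,
    "JA": 9865.59485,
}
# Estimated bytes per character (from the second table).
bytes_per_char = {
    "EN": 1.006978892420735,
    "FR": 1.0243214042939228,
    "RU": 1.5362439940531318,
    "JA": 1.843857157700553,
}

# Convert each average from bytes to characters.
average_chars = {
    lang: average_bytes[lang] / bytes_per_char[lang] for lang in average_bytes
}
ranking = sorted(average_chars, key=average_chars.get, reverse=True)
extra_percent = (average_chars["EN"] / average_chars["FR"] - 1) * 100

print(ranking)
print(f"EN vs FR: {extra_percent:.1f}% more characters")
```

With these values the English average comes out roughly 19% above the French one, in line with the figure quoted above.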

The source code for this analysis is available here: https://github.com/jverneaut/wikipedia-analysis/